Introduction

Welcome to the Pywr book! Pywr is an open-source network resource allocation model. This book is a collection of documentation and tutorials for using Pywr. It should be read alongside the Pywr API documentation.

What is Pywr?

Pywr is a Rust crate and Python package for building and running water resource models. It allows users to construct models of water systems using a network of nodes and links, and other data. The model can then be used to simulate the operation of a water system, and to evaluate the performance of the system under different scenarios.

This version is a major update to the original Pywr model, which was written in Python and Cython. The new version is written in Rust, and uses Python bindings to expose the functionality to Python.

Installation

Pywr is both a Rust library and a Python package.

Rust

TBC

Python

Pywr requires Python 3.10 or later. It is currently available on PyPI as a pre-release.

Note: Pywr v2.x is currently in pre-release and may not be suitable for production use. If you require Pywr v1.x, please use:

pip install "pywr<2"

Installing from PyPI (pre-release)

Using pip and venv

It is recommended to install Pywr into a virtual environment.

python -m venv .venv

source .venv/bin/activate # On Windows use `.venv\Scripts\activate`

pip install pywr --pre

Using uv

Alternatively, you can use uv to create and manage virtual environments:

uv init my-project

cd my-project

uv add pywr --pre

Installing from a wheel

Alternatively, wheels are available from the GitHub Actions page. Navigate to the latest successful build, download the archive, and extract the wheel for your platform. The wheel can then be installed with pip, for example:

pip install pywr-2.0.0b0-cp310-abi3-win_amd64.whl

Checking the installation

To verify the installation, run the following command to display the command line help:

python -m pywr --help

Running a model

Pywr is a modelling system for simulating water resources systems.

Models are defined using a JSON schema, and can be run using the pywr command line tool.

Below is an example of a simple model definition simple1.json:

{

"metadata": {

"title": "Simple 1",

"description": "A very simple example.",

"minimum_version": "0.1"

},

"timestepper": {

"start": "2015-01-01",

"end": "2015-12-31",

"timestep": {

"type": "Days",

"days": 1

}

},

"network": {

"nodes": [

{

"meta": {

"name": "supply1"

},

"type": "Input",

"max_flow": {

"type": "Literal",

"value": 15.0

}

},

{

"meta": {

"name": "link1"

},

"type": "Link"

},

{

"meta": {

"name": "demand1"

},

"type": "Output",

"max_flow": {

"type": "Parameter",

"name": "demand"

},

"cost": {

"type": "Literal",

"value": -10

}

}

],

"edges": [

{

"from_node": "supply1",

"to_node": "link1"

},

{

"from_node": "link1",

"to_node": "demand1"

}

],

"parameters": [

{

"meta": {

"name": "demand"

},

"type": "Constant",

"value": {

"type": "Literal",

"value": 10.0

}

}

],

"metric_sets": [

{

"name": "all",

"filters": {

"all_nodes": true,

"all_virtual_nodes": true,

"all_parameters": true,

"all_edges": true

}

}

],

"outputs": [

{

"name": "all",

"type": "Memory",

"metric_set": "all"

},

{

"name": "all-hdf5",

"type": "HDF5",

"filename": "outputs.h5",

"metric_set": "all"

}

]

}

}

To run the model, use the pywr command line tool:

python -m pywr run simple1.json
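
Before running a model, it can be useful to sanity-check the JSON. The sketch below uses only the Python standard library and the structure of simple1.json shown above. It checks that every edge refers to a node that is defined in the network. The helper `find_dangling_edges` is hypothetical and written for illustration; it is not part of Pywr.

```python
import json

# A trimmed copy of the network from simple1.json (only the fields used here).
MODEL_JSON = """
{
  "network": {
    "nodes": [
      {"meta": {"name": "supply1"}, "type": "Input"},
      {"meta": {"name": "link1"}, "type": "Link"},
      {"meta": {"name": "demand1"}, "type": "Output"}
    ],
    "edges": [
      {"from_node": "supply1", "to_node": "link1"},
      {"from_node": "link1", "to_node": "demand1"}
    ]
  }
}
"""


def find_dangling_edges(model: dict) -> list[tuple[str, str]]:
    """Return edges that reference a node name not defined in the network."""
    network = model["network"]
    names = {node["meta"]["name"] for node in network["nodes"]}
    return [
        (edge["from_node"], edge["to_node"])
        for edge in network["edges"]
        if edge["from_node"] not in names or edge["to_node"] not in names
    ]


model = json.loads(MODEL_JSON)
dangling = find_dangling_edges(model)
print("Dangling edges:", dangling)
```

An empty list means that all edges connect defined nodes. Pywr performs its own validation when the model is loaded, so a check like this is only a convenience while editing.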

Related projects

Core concepts

The network

Parameters

Penalty costs

Reservoirs

Abstraction licences

Scenarios

Pywr has built-in support for running multiple scenarios. Scenarios are a way to define different sets of input data or parameters that can be used to run a model. This is often useful for sensitivity analysis, stochastic hydrological data, or climate change scenarios. Pywr's scenario system is used to define a set of simulations that are, by default, all run together. This requires that all scenarios simulate the same time period and use the same time step. However, it means that Pywr can gain efficiency by stepping through the time domain only once. For example, the majority of the data required for the model can be loaded once and then shared between the scenarios. The Pywr v2.x scenario system is more flexible than in v1.x and, crucially, allows scenarios to be run in parallel without the need for multiprocessing (which duplicates memory usage).

In this section, we will cover how to define scenarios in Pywr and how to run them.

Defining Scenarios

Scenarios are defined in the scenarios section of the model configuration file. A model can have multiple scenario groups, each defining a set of scenarios. By default, Pywr will run the full combination of all scenarios in all groups. If no scenarios are defined, Pywr will run a single scenario.

The simplest scenario definition contains a groups list with a single scenario group that has a name and size. The following example defines such a scenario domain with a single group containing 5 scenarios. If this Pywr model is run, it will run 5 scenarios.

{

"groups": [

{

"name": "Scenario A",

"size": 5

}

]

}

By default, the scenarios in a group will be given a numeric label starting from 0. However, it is possible to define a labels list to give scenarios more meaningful names. The following example defines a scenario group with 5 scenarios using Roman numerals as labels.

{

"groups": [

{

"name": "Scenario A",

"size": 5,

"labels": [

"I",

"II",

"III",

"IV",

"V"

]

}

]

}

Additional scenario groups can be defined by adding them to the groups list. The following example defines two groups, "Scenario A" and "Scenario B", with sizes 5 and 3 respectively. This domain would create 15 (5 x 3) simulations.

{

"groups": [

{

"name": "Scenario A",

"size": 5,

"labels": [

"I",

"II",

"III",

"IV",

"V"

]

},

{

"name": "Scenario B",

"size": 3

}

]

}
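
The number of simulations in a scenario domain is the product of the group sizes. The sketch below reproduces that arithmetic for the two groups above using the standard library. The ordering of the combinations comes from `itertools.product` and is an assumption for illustration; it is not necessarily the order Pywr uses internally. `group_labels` is a hypothetical helper.

```python
from itertools import product
from math import prod

groups = [
    {"name": "Scenario A", "size": 5, "labels": ["I", "II", "III", "IV", "V"]},
    {"name": "Scenario B", "size": 3},
]


def group_labels(group: dict) -> list[str]:
    """Use the explicit labels if given, otherwise numeric labels from 0."""
    return group.get("labels", [str(i) for i in range(group["size"])])


# Total number of simulations is the product of the group sizes.
n_simulations = prod(group["size"] for group in groups)

# Every simulation picks exactly one scenario from each group.
combinations = list(product(*(group_labels(group) for group in groups)))

print(n_simulations)
print(combinations[:4])
```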

Running subsets of scenarios

It is often useful to run only a subset of the scenarios defined in a model. This can be done by either specifying the specific scenarios in each group to run, or by providing specific combinations of scenarios to run.

Note: These approaches are mutually exclusive.

Subsetting groups

To run only a subset of scenarios in a group, the subset key can be used. The following example shows three groups, each with 5 scenarios. The subset key is used to specify the scenarios to run in each group. The first group is subset using the scenario group's labels, the second group using the scenario group's indices, and the third group using a slice. In all cases the subset selects the 2nd, 3rd and 4th scenarios. This will result in 27 (3 x 3 x 3) simulations using the product of the subsets.

Note: The indices and slice are zero-based. In the example, the slice end (4) is exclusive, so the slice selects indices 1, 2 and 3.

{

"groups": [

{

"name": "Scenario A",

"size": 5,

"labels": [

"I",

"II",

"III",

"IV",

"V"

],

"subset": {

"type": "Labels",

"labels": [

"II",

"III",

"IV"

]

}

},

{

"name": "Scenario B",

"size": 5,

"subset": {

"type": "Indices",

"indices": [

1,

2,

3

]

}

},

{

"name": "Scenario C",

"size": 5,

"subset": {

"type": "Slice",

"start": 1,

"end": 4

}

}

]

}
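
To make the three subset types concrete, the sketch below resolves each of them to zero-based scenario indices. It is a plain Python illustration of the semantics described in this section, not Pywr's implementation. `resolve_subset` is a hypothetical helper, and the exclusive slice end is an assumption inferred from the example (start 1, end 4 selecting the 2nd to 4th scenarios).

```python
from math import prod


def resolve_subset(group: dict) -> list[int]:
    """Return the zero-based scenario indices selected by a group's subset."""
    subset = group.get("subset")
    if subset is None:
        return list(range(group["size"]))
    if subset["type"] == "Labels":
        labels = group["labels"]
        return [labels.index(label) for label in subset["labels"]]
    if subset["type"] == "Indices":
        return list(subset["indices"])
    if subset["type"] == "Slice":
        # Assumption: the end index is exclusive, as in a Python slice.
        return list(range(subset["start"], subset["end"]))
    raise ValueError(f"Unknown subset type: {subset['type']}")


groups = [
    {
        "name": "Scenario A",
        "size": 5,
        "labels": ["I", "II", "III", "IV", "V"],
        "subset": {"type": "Labels", "labels": ["II", "III", "IV"]},
    },
    {"name": "Scenario B", "size": 5, "subset": {"type": "Indices", "indices": [1, 2, 3]}},
    {"name": "Scenario C", "size": 5, "subset": {"type": "Slice", "start": 1, "end": 4}},
]

selected = [resolve_subset(group) for group in groups]
n_simulations = prod(len(indices) for indices in selected)

print(selected)
print(n_simulations)
```

Each group resolves to the indices 1, 2 and 3, so the product of the subsets contains 3 x 3 x 3 = 27 combinations.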

Specifying specific combinations

To run specific combinations of scenarios, the combinations key can be used. The following example shows three groups, each with 5 scenarios. The combinations key is used to specify the exact scenarios to run. Each combination is a list with one entry per group that identifies the scenario to use from that group. In the example, the first group is referenced by label and the other two groups by zero-based index. The example runs the 1st, 3rd and 5th scenarios of each group together, which results in 3 simulations.

{

"groups": [

{

"name": "Scenario A",

"size": 5,

"labels": [

"I",

"II",

"III",

"IV",

"V"

]

},

{

"name": "Scenario B",

"size": 5

},

{

"name": "Scenario C",

"size": 5

}

],

"combinations": [

[

"I",

0,

0

],

[

"III",

2,

2

],

[

"V",

4,

4

]

]

}
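
The sketch below shows one way to read the combinations list: each entry is matched to its group by position and converted to a zero-based index. This is an illustration of the example above only. `to_index` is a hypothetical helper, and treating string entries as labels and integer entries as indices is an assumption based on the example JSON.

```python
groups = [
    {"name": "Scenario A", "size": 5, "labels": ["I", "II", "III", "IV", "V"]},
    {"name": "Scenario B", "size": 5},
    {"name": "Scenario C", "size": 5},
]
combinations = [["I", 0, 0], ["III", 2, 2], ["V", 4, 4]]


def to_index(group: dict, entry) -> int:
    """Convert a label or index entry to a validated zero-based index."""
    # Assumption: strings are labels, integers are zero-based indices.
    index = group["labels"].index(entry) if isinstance(entry, str) else entry
    if not 0 <= index < group["size"]:
        raise IndexError(f"{entry!r} is out of range for group {group['name']!r}")
    return index


resolved = [
    tuple(to_index(group, entry) for group, entry in zip(groups, combination))
    for combination in combinations
]

print(resolved)
```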

Input data

External data

Providing data to your Pywr model is essential. While some information can be encoded as constants or other values in the JSON, most real-world models require external data, such as time series or lookup tables. Pywr supports loading data from CSV files using data tables, which can provide both scalar and array values to parameters and nodes. Data tables allow flexible lookup schemes, including row-based, column-based, and combined row/column indexing.

Scalar Data Tables

Scalar data tables provide single constant values indexed by rows and/or columns. Using a data table might allow you to avoid hardcoding values in your model JSON, making it easier to update and manage. For example, you might have a data table that provides asset capacities, and a separate table for asset costs. By swapping out the CSV files, you can easily change the model's parameters without modifying the JSON. However, this can make the model less transparent, as the values are not directly visible in the JSON.

Note: Currently, Pywr supports up to 4 keys for scalar data tables. This means you can have up to 4 row indices, or a combination of row and column indices that total 4.

Row-based scalar data tables

Row-based scalar data tables use the row index to look up values. This is useful when you have a list of assets or parameters, and you want to assign a specific value to each one. For example, consider the following CSV file, "tbl-scalar-row.csv":

key,value

A,1.0

B,2.0

C,3.0

This table has two columns: key and value. The key column contains the row index, which can be any string or number. The value column contains the corresponding value for each key. To use this table in your model, you would define a table in your JSON, and then reference it in a parameter, node, etc. For example, to load the above table, define the following in your model JSON:

{

"meta": {

"name": "scalar-row"

},

"type": "Scalar",

"format": "CSV",

"lookup": {

"type": "Row",

"cols": 1

},

"url": "tbl-scalar-row.csv"

}

The JSON snippet above defines a table named scalar-row that loads data from the CSV file. It specifies that the

table contains a single row index, and that the table is expected to return a single scalar value. The actual header

values in the CSV file are not important, as long as the first column is used for the row index and the second column

contains the values. The table assumes that the first row contains the header, and the data starts from the second row.

Once the table is defined, you can reference it in a parameter. For example, to use the scalar-row table to provide

a value for a ConstantParameter, you would reference it for the value field. A table reference like this can be

used anywhere a Metric or ConstantValue is expected.

{

"meta": {

"name": "my-constant-C"

},

"type": "Constant",

"value": {

"type": "Table",

"table": "scalar-row",

"row": "C"

}

}

It can be useful to organise the data in a table with multiple keys. For example, you might have a table that provides different data for different assets. In this case, you can use one key for the asset and one key for the data type. For example, consider the following CSV file, "tbl-scalar-row-row.csv":

key1,key2,value

A,X,10.0

A,Y,11.0

B,X,20.0

B,Y,21.0

To use a value from this table in the model, it can be referenced in a similar way to the single-key table, but you need to provide both keys:

{

"meta": {

"name": "my-constant-A-Y"

},

"type": "Constant",

"value": {

"type": "Table",

"table": "scalar-row-row",

"row": [

"A",

"Y"

]

}

}
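
The lookup semantics of a row-based scalar table can be sketched with the standard `csv` module. The code below mimics the behaviour described in this section (header row skipped, leading columns used as keys, the next column as the value). It is not Pywr's loader, and `load_row_table` is a hypothetical helper.

```python
import csv
import io

# Contents of tbl-scalar-row-row.csv from the example above.
CSV_TEXT = """key1,key2,value
A,X,10.0
A,Y,11.0
B,X,20.0
B,Y,21.0
"""


def load_row_table(text: str, n_keys: int) -> dict[tuple[str, ...], float]:
    """Map the first `n_keys` columns of each row to the value that follows."""
    reader = csv.reader(io.StringIO(text))
    next(reader)  # Skip the header row; its names are not used for lookup.
    return {tuple(row[:n_keys]): float(row[n_keys]) for row in reader}


table = load_row_table(CSV_TEXT, n_keys=2)

# Equivalent of the reference {"table": "scalar-row-row", "row": ["A", "Y"]}.
value = table[("A", "Y")]
print(value)
```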

Column-based scalar data tables

Alternatively a column-based scalar data table can be used. Column-based scalar data tables use the column header to look up values. For example, consider the following CSV file, "tbl-scalar-col.csv":

A,B,C

1.0,2.0,3.0

This is similar to the row-based table, but the column headers are used as the keys. To use this table in your model, you would define a table in your JSON, and then reference it in a parameter, node, etc. For example, to load the above table, define the following in your model JSON:

{

"meta": {

"name": "scalar-col"

},

"type": "Scalar",

"format": "CSV",

"lookup": {

"type": "Col",

"rows": 1

},

"url": "tbl-scalar-col.csv"

}

When referencing a column-based table, you need to provide the column key. For example, to use the scalar-col table

to provide a value for a ConstantParameter, you would reference it for the value field, and provide the column key.

{

"meta": {

"name": "my-constant-B"

},

"type": "Constant",

"value": {

"type": "Table",

"table": "scalar-col",

"row": "B"

}

}

Row & column-based scalar data tables

Row & column-based scalar data tables use both row and column indices to look up values. This is useful when you have a matrix of values, and you want to assign a specific value to each combination of row and column. For example, consider the following CSV file, "tbl-scalar-row-col.csv":

key,🦀,🐍

A,1.0,2.0

B,3.0,4.0

To use this table in your model, you would define a table in your JSON, and then reference it in a parameter, node,

etc. For example, to load the above table define the following in your model JSON:

{

"meta": {

"name": "scalar-row-col"

},

"type": "Scalar",

"format": "CSV",

"lookup": {

"type": "Both",

"rows": 1,

"cols": 1

},

"url": "tbl-scalar-row-col.csv"

}

When referencing a row & column-based table, you need to provide both the row and column keys. For example, to use the

scalar-row-col table to provide a value for a ConstantParameter, you would reference it for the value field, and

provide both the row and column keys.

Note: This example uses an emoji (🐍) as a column key. While this is valid, it may cause issues with some software and libraries, and must be encoded correctly in the JSON (as shown).

{

"meta": {

"name": "my-constant-A-python"

},

"type": "Constant",

"value": {

"type": "Table",

"table": "scalar-row-col",

"row": "A",

"column": "\uD83D\uDC0D"

}

}
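
The sketch below illustrates two points from this section using only the standard library: a combined row and column lookup, and the fact that the JSON escape `\uD83D\uDC0D` decodes to the snake emoji used in the CSV header. It mimics the lookup described above rather than reproducing Pywr's loader, and `load_row_col_table` is a hypothetical helper.

```python
import csv
import io
import json

# Contents of tbl-scalar-row-col.csv from the example above.
CSV_TEXT = "key,🦀,🐍\nA,1.0,2.0\nB,3.0,4.0\n"

# The table reference from the JSON above, with the snake emoji written as a
# UTF-16 surrogate pair escape.
REFERENCE_JSON = (
    r'{"type": "Table", "table": "scalar-row-col", '
    r'"row": "A", "column": "\uD83D\uDC0D"}'
)


def load_row_col_table(text: str) -> dict[tuple[str, str], float]:
    """Map (row key, column header) pairs to the value in that cell."""
    reader = csv.reader(io.StringIO(text))
    columns = next(reader)[1:]  # Headers after the first (row key) column.
    table = {}
    for row in reader:
        for column, cell in zip(columns, row[1:]):
            table[(row[0], column)] = float(cell)
    return table


reference = json.loads(REFERENCE_JSON)
table = load_row_col_table(CSV_TEXT)
value = table[(reference["row"], reference["column"])]

print(reference["column"], value)
```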

Array Data Tables

Array data tables provide array values indexed by rows or columns. This is useful for certain types of parameters, such as monthly or daily profiles, which require an array of values. The following example shows how to define an array data table in CSV format with a single row index.

Note: Currently, Pywr supports up to 4 keys for array data tables. This means you can have up to 4 row or column indices.

month,1,2,3,4,5,6,7,8,9,10,11,12

profile1,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5

profile2,0.6,0.7,0.75,0.8,0.75,0.7,0.7,0.6,0.6,0.6,0.6,0.6

profile3,0.7,0.8,0.9,0.9,0.9,0.8,0.8,0.75,0.7,0.7,0.65,0.65

To use this table in your model use "type": "Array" in the table definition in your JSON, as shown below.

{

"meta": {

"name": "array-row"

},

"type": "Array",

"format": "CSV",

"lookup": {

"type": "Row",

"cols": 1

},

"url": "tbl-array-row.csv"

}

The same data can be formatted with a column index instead of a row index, as shown below.

month,profile1,profile2,profile3

1,0.5,0.6,0.7

2,0.5,0.7,0.8

3,0.5,0.75,0.9

4,0.5,0.8,0.9

5,0.5,0.75,0.9

6,0.5,0.7,0.8

7,0.5,0.7,0.8

8,0.5,0.6,0.75

9,0.5,0.6,0.7

10,0.5,0.6,0.7

11,0.5,0.6,0.65

12,0.5,0.6,0.65

And the corresponding table definition in JSON:

{

"meta": {

"name": "array-col"

},

"type": "Array",

"format": "CSV",

"lookup": {

"type": "Col",

"rows": 1

},

"url": "tbl-array-col.csv"

}
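
The row-indexed and column-indexed layouts carry the same information. The sketch below reads both CSV files from this section with the standard `csv` module and checks that they produce identical 12-value arrays. The two loader functions are hypothetical helpers for illustration and do not reflect how Pywr reads tables internally.

```python
import csv
import io

ROW_CSV = """month,1,2,3,4,5,6,7,8,9,10,11,12
profile1,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5
profile2,0.6,0.7,0.75,0.8,0.75,0.7,0.7,0.6,0.6,0.6,0.6,0.6
profile3,0.7,0.8,0.9,0.9,0.9,0.8,0.8,0.75,0.7,0.7,0.65,0.65
"""

COL_CSV = """month,profile1,profile2,profile3
1,0.5,0.6,0.7
2,0.5,0.7,0.8
3,0.5,0.75,0.9
4,0.5,0.8,0.9
5,0.5,0.75,0.9
6,0.5,0.7,0.8
7,0.5,0.7,0.8
8,0.5,0.6,0.75
9,0.5,0.6,0.7
10,0.5,0.6,0.7
11,0.5,0.6,0.65
12,0.5,0.6,0.65
"""


def load_array_rows(text: str) -> dict[str, list[float]]:
    """Each row after the header is one array, keyed by its first column."""
    reader = csv.reader(io.StringIO(text))
    next(reader)  # Skip the header row.
    return {row[0]: [float(cell) for cell in row[1:]] for row in reader}


def load_array_cols(text: str) -> dict[str, list[float]]:
    """Each column after the first is one array, keyed by its header."""
    reader = csv.reader(io.StringIO(text))
    names = next(reader)[1:]
    arrays: dict[str, list[float]] = {name: [] for name in names}
    for row in reader:
        for name, cell in zip(names, row[1:]):
            arrays[name].append(float(cell))
    return arrays


by_row = load_array_rows(ROW_CSV)
by_col = load_array_cols(COL_CSV)
print(by_row == by_col)
```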

Extending functionality with custom parameters

Parameters are a core part of Pywr, allowing you to define how your model behaves. While Pywr comes with a wide range of built-in parameters, you may find that you need to create custom parameters to suit your specific modelling needs. This guide will walk you through the process of creating custom parameters in Pywr.

Currently, Pywr supports custom parameters that are defined in Python. If your parameter is general enough, you may want to consider contributing it to the Pywr project. If you do, please see the Developers Guide for more information on how to do this.

Python functions

The simplest way to create a custom parameter is to define a Python function.

This function should accept at least one argument, which is a ParameterInfo object.

This object contains information from the model, such as the current time step, scenario index,

and any metric values that have been requested.

Additional arguments can also be passed to the function.

Here is an example of a simple custom parameter that returns the current time step:

# custom_parameters.py
from pywr import ParameterInfo


def current_time_step(info: ParameterInfo) -> float:
    """Return the index of the current time step."""
    return info.timestep.index

To use this custom parameter in your model it must be defined as a Parameter in your model's JSON file.

Below is an example of how to define the current_time_step parameter in your model's JSON file.

The source field specifies the path to the Python file containing the function,

and the object field specifies the name of the function to call.

{

"parameters": [

{

"meta": {

"name": "current-time-step"

},

"type": "Python",

"source": {

"type": "Path",

"path": "custom_parameters.py"

},

"object": {

"type": "Function",

"class": "current_time_step"

},

"args": [],

"kwargs": {}

}

]

}

Constant arguments

In reality, your function will likely need to accept additional arguments. These arguments might be constants that change the behaviour of the function, but that neither change over time nor depend on the model's simulation state. In this case they can be defined as args or kwargs in the parameter definition. Only simple types that are supported by JSON can be used as arguments, such as strings, numbers, and booleans. By parameterising these values, you can change them without modifying the Python code, or reuse the same function with different values in different parts of the model.

# custom_parameters.py
from pywr import ParameterInfo


def current_timestep(info: ParameterInfo, a: float, b: float, some_condition: str = "foo") -> float:
    """Return the current time-step index offset by `a` or `b`."""
    match some_condition:
        case "foo":
            return info.timestep.index + a
        case "bar":
            return info.timestep.index + b
        case _:
            raise ValueError(f"Invalid condition: {some_condition}")

To pass these arguments to the function, you can define them in the model's JSON file as follows:

{

"parameters": [

{

"meta": {

"name": "current-time-step"

},

"type": "Python",

"source": {

"type": "Path",

"path": "custom_parameters.py"

},

"object": {

"type": "Function",

"class": "current_timestep"

},

"args": [

1.0,

2.0

],

"kwargs": {

"some_condition": "foo"

}

}

]

}

Metrics from the model

More complex parameters will need information from the model, such as the current volume of a reservoir or the value of another parameter. These values need to be requested in the JSON definition of the parameter; they can then be accessed in the function using the ParameterInfo object.

# custom_parameters.py
from pywr import ParameterInfo


def factor_volume(info: ParameterInfo, factor: float) -> float:
    """Return the current volume of a reservoir scaled by `factor`."""
    volume = info.get_metric("volume")
    return factor * volume

The JSON definition of the parameter needs to include a metrics and/or indices field that specifies which model

metrics to request. Both fields are a dictionary where the keys are the keys used to retrieve the values from the

ParameterInfo object, and the values specify the metric to retrieve. Metrics are accessed using get_metric(key),

and indices are accessed using get_index(key).

{

"parameters": [

{

"meta": {

"name": "factor-volume"

},

"type": "Python",

"source": {

"type": "Path",

"path": "custom_parameters.py"

},

"object": {

"type": "Function",

"class": "factor_volume"

},

"args": [

2.0

],

"metrics": {

"volume": {

"type": "Node",

"name": "a-reservoir",

"attribute": "Volume"

}

}

}

]

}
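
Functions that read metrics can be unit tested without running a model by passing a small stand-in object. The sketch below assumes only the two features of ParameterInfo used in this guide (`timestep.index` and `get_metric(key)`). `FakeInfo` is a hypothetical test double and is not part of Pywr.

```python
from types import SimpleNamespace


class FakeInfo:
    """Hypothetical stand-in for ParameterInfo, for tests only."""

    def __init__(self, index: int, metrics: dict[str, float]):
        self.timestep = SimpleNamespace(index=index)
        self._metrics = metrics

    def get_metric(self, key: str) -> float:
        # Keys correspond to the keys of the "metrics" field in the JSON.
        return self._metrics[key]


def factor_volume(info, factor: float) -> float:
    """Return the current volume of a reservoir scaled by `factor`."""
    volume = info.get_metric("volume")
    return factor * volume


# Pretend the reservoir currently holds 150 units of water.
info = FakeInfo(index=0, metrics={"volume": 150.0})
scaled = factor_volume(info, 2.0)
print(scaled)
```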

Python classes & stateful parameters

If your parameter needs to maintain state between calls, you can define it as a Python class. This class should implement an __init__ method that sets up the parameter, including any initial state. The __init__ method is passed the args and kwargs defined in the JSON file. Pywr will create an instance of the class for every scenario in a simulation. These instances will be reused for each time step in the scenario, allowing you to maintain state across time steps.

Note: Unlike Pywr v1.x, a separate instance of the class is created for each scenario. This means you do not have to worry about state being shared between scenarios, and do not need to implement state for each scenario yourself.

The class should also implement a calc method, which is called for each time step in the scenario. This method should accept a ParameterInfo object as its only argument.

Finally, the class may also implement an after method, which is called after the resource allocation has been completed for the time step. This method can be used to perform any final calculations or updates to the parameter state.

Here is an example of a simple stateful parameter that counts the number of time steps:

# custom_parameters.py
from pywr import ParameterInfo


class TimeStepCounter:
    """A parameter that counts the number of time steps."""

    def __init__(self, initial_value: int = 0):
        self.count = initial_value

    def calc(self, _info: ParameterInfo) -> float:
        """Return the current time step count."""
        # Note that `_info` is not used, but it is required by the interface.
        self.count += 1
        return self.count

To use this custom parameter in your model, you can define it in the JSON file as follows:

{

"parameters": [

{

"meta": {

"name": "time-step-counter"

},

"type": "Python",

"source": {

"type": "Path",

"path": "custom_parameters.py"

},

"object": {

"type": "Class",

"class": "TimeStepCounter"

},

"args": [

0

],

"kwargs": {}

}

]

}
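
The effect of having one instance per scenario can be illustrated by driving the class by hand. The sketch below is not how Pywr schedules calls (in a real run all scenarios advance through the same time steps); it steps the instances unevenly on purpose to show that their state is independent. Passing `None` in place of a ParameterInfo object works only because this particular `calc` ignores its argument.

```python
class TimeStepCounter:
    """A parameter that counts the number of time steps."""

    def __init__(self, initial_value: int = 0):
        self.count = initial_value

    def calc(self, _info) -> float:
        """Return the current time step count."""
        self.count += 1
        return self.count


# Assumption: one instance per scenario, built from the JSON args and kwargs.
n_scenarios = 3
args, kwargs = [0], {}
instances = [TimeStepCounter(*args, **kwargs) for _ in range(n_scenarios)]

# Advance scenario 0 by three steps and scenario 1 by one step.
for _ in range(3):
    instances[0].calc(None)
instances[1].calc(None)

counts = [instance.count for instance in instances]
print(counts)
```

Each instance keeps its own count, so no per-scenario bookkeeping is needed inside the class.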

Using modules instead of files

It might be more convenient to define your custom parameters in a Python module instead of a file. This

allows you to integrate your custom parameters with other Python code, such as unit tests or other utility functions.

To do this, you can use the source field to specify the module name instead of a file path.

Here is an example of how to define a custom parameter in a module (in this case my_model.parameters):

{

"parameters": [

{

"meta": {

"name": "current-time-step"

},

"type": "Python",

"source": {

"type": "Module",

"module": "my_model.parameters"

},

"object": {

"type": "Function",

"class": "current_time_step"

},

"args": [],

"kwargs": {}

}

]

}

Returning integers or multiple values

In the examples above the custom parameter functions return a single floating point value.

However, you can also return integers or multiple values.

In the JSON definition of the parameter, you can specify the return_type field to indicate the type of value

the function will return.

To return an integer, you can set the return_type to "Int".

To return multiple values, you can set the return_type to "Dict" and the function should return a dictionary

where the keys are the names of the values and the values are the values themselves.

An example of a custom parameter that returns multiple values is shown below:

# custom_parameters.py
from pywr import ParameterInfo


def multiple_values(info: ParameterInfo, factor: float) -> dict:
    """Return multiple values."""
    return {
        "value1": info.timestep.index,
        "value2": info.get_metric("volume") * factor,
    }

The corresponding JSON for this parameter would look like this:

{

"parameters": [

{

"meta": {

"name": "multiple_values"

},

"type": "Python",

"source": {

"type": "Module",

"module": "my_model.parameters"

},

"object": {

"type": "Function",

"class": "multiple_values"

},

"return_type": "Dict",

"args": [

2.0

],

"metrics": {

"volume": {

"type": "Node",

"name": "a-reservoir",

"attribute": "Volume"

}

}

}

]

}

The returned values can then be accessed elsewhere in the model using the keys defined in the dictionary. The reference below uses "value1"; use "value2" as the key to reference the second value.

{
    "type": "Parameter",
    "name": "multiple_values",
    "key": "value1"
}

Cython (and other compiled languages)

Cython functions and classes can be used in Pywr as long as they are accessible from Python and can be imported by Pywr at runtime. In this case, using a module to locate the custom parameter is recommended. Otherwise, there is no difference in how you define the custom parameter in the model's JSON file.

Other compiled languages can also be used, but you will need to ensure that the compiled code is accessible from Python.

This can be done by using a Python wrapper around the compiled code, or by using a foreign function interface (FFI)

such as ctypes or cffi.

River routing and attenuation

To account for flow attenuation and travel time in river reaches, Pywr includes a number of routing methods. Currently available methods are:

- Delay: Delay flow by a fixed number of time-steps

- Muskingum: Muskingum routing method

Delay routing

The delay routing method simply delays flow by a fixed number of time-steps. This can be implemented

using either DelayNode or RiverNode with a routing method of delay. Internally, the delay

is implemented using a DelayParameter which simply stores the flow values in a queue and returns

the value from the appropriate time-step in the past.
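
The queue-based idea can be sketched in a few lines of Python. This is an illustration of the technique only, not the DelayParameter implementation. The class name `DelayQueue` is hypothetical, and the initial value of 0.0 returned before any delayed flow is available is an assumption.

```python
from collections import deque


class DelayQueue:
    """Return each inflow `delay` time-steps after it was pushed."""

    def __init__(self, delay: int, initial_value: float = 0.0):
        if delay < 1:
            raise ValueError("delay must be at least one time-step")
        # Pre-fill so the first `delay` outflows equal `initial_value`.
        self._queue = deque([initial_value] * delay, maxlen=delay)

    def step(self, inflow: float) -> float:
        """Record this time-step's inflow and return the delayed flow."""
        outflow = self._queue[0]
        # With maxlen set, appending discards the oldest value automatically.
        self._queue.append(inflow)
        return outflow


delay = DelayQueue(delay=2)
outflows = [delay.step(inflow) for inflow in [10.0, 20.0, 30.0, 40.0]]
print(outflows)
```

With a delay of two time-steps, the first two outflows are the initial value and the inflows 10.0 and 20.0 emerge at the third and fourth time-steps.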

Muskingum routing

The Muskingum routing method is a widely used hydrological method for simulating the movement of flood waves through river channels. It is based on the principle of conservation of mass (continuity), combined with a simple linear relationship that describes the storage in a river reach as a function of its inflow and outflow. The implementation in Pywr is based on the following equation:

\[ O_t = \left(\frac{\Delta t - 2KX}{2K(1-X) + \Delta t}\right)I_t + \left(\frac{\Delta t + 2KX}{2K(1-X)+\Delta t}\right)I_{t-1} + \left(\frac{2K(1-X)-\Delta t}{2K(1-X)+\Delta t}\right)O_{t-1} \]

This relates the outflow of the reach at time t \( (O_t) \) to the inflow at time t \( (I_t) \) and the inflow and

outflow at the previous time step (\( I_{t-1} \) and \( O_{t-1} \) respectively).

This is implemented in Pywr using a MuskingumParameter which uses the above equation to calculate the factors in

an equality constraint of the form:

\[ O_t - aI_t = b \]

where \( a \) is the coefficient of \( I_t \) in the routing equation, and \( b \) is the sum of the previous time-step terms, that is, the coefficients of \( I_{t-1} \) and \( O_{t-1} \) multiplied by their respective values.

Parameters

The Muskingum routing method requires two parameters:

- K: The storage time constant (in time-steps). This represents the time it takes for water to travel through the reach.

- X: The weighting factor (dimensionless between 0.0 and 0.5). This represents the relative importance of inflow and outflow in the reach.

The initial condition can also be specified by the user or set to "steady state". The former sets the initial inflow and outflow to the specified values, while the latter modifies the constraint to require that the inflow and outflow are equal at the first time-step.

See also the HEC-HMS documentation on the Muskingum method for a longer explanation of the parameters and the method.
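
The routing equation can be checked numerically with a short standalone sketch. The code below evaluates the three coefficients from the equation above, forms the \( a \) and \( b \) terms of the constraint, and routes a simple inflow pulse. It is an illustration under stated assumptions rather than the MuskingumParameter implementation: the function names are hypothetical, \( \Delta t \) is taken as 1 because K is expressed in time-steps, and the steady-state initial condition is modelled by setting the first outflow equal to the first inflow.

```python
def muskingum_coefficients(k: float, x: float, dt: float = 1.0) -> tuple[float, float, float]:
    """Return the coefficients of I_t, I_{t-1} and O_{t-1}."""
    denominator = 2.0 * k * (1.0 - x) + dt
    c_inflow = (dt - 2.0 * k * x) / denominator
    c_prev_inflow = (dt + 2.0 * k * x) / denominator
    c_prev_outflow = (2.0 * k * (1.0 - x) - dt) / denominator
    return c_inflow, c_prev_inflow, c_prev_outflow


def route(inflows: list[float], k: float, x: float, dt: float = 1.0) -> list[float]:
    """Route inflows through one reach, starting from steady state."""
    c_inflow, c_prev_inflow, c_prev_outflow = muskingum_coefficients(k, x, dt)
    outflows = [inflows[0]]  # Steady state: O_0 = I_0.
    for t in range(1, len(inflows)):
        a = c_inflow
        b = c_prev_inflow * inflows[t - 1] + c_prev_outflow * outflows[t - 1]
        outflows.append(a * inflows[t] + b)  # O_t = a * I_t + b
    return outflows


coefficients = muskingum_coefficients(k=1.1, x=0.25)
inflows = [10.0, 10.0, 50.0, 10.0, 10.0, 10.0, 10.0, 10.0]
outflows = route(inflows, k=1.1, x=0.25)

print([round(c, 4) for c in coefficients])
print([round(q, 2) for q in outflows])
```

The three coefficients always sum to one, so a constant inflow produces an equal constant outflow. For the pulse above, the outflow peak is lower and later than the inflow peak, which is the attenuation and travel-time effect the method is intended to represent.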

Example

The easiest way to use Muskingum routing is to use a RiverNode with a routing method of Muskingum. This will create

a MuskingumParameter internally.

An example of a RiverNode with Muskingum routing is shown below:

{

"meta": {

"name": "reach1"

},

"type": "River",

"routing_method": {

"type": "Muskingum",

"travel_time": {

"type": "Constant",

"value": 1.1

},

"weight": {

"type": "Constant",

"value": 0.25

},

"initial_condition": {

"type": "SteadyState"

}

}

}

Migrating from Pywr v1.x

This guide is intended to help users of Pywr v1.x migrate to Pywr v2.x. Pywr v2.x is a complete rewrite of Pywr with a new API and new features. This guide will help you update your models to this new version.

Overview of the process

Pywr v2.x includes a stricter schema for defining models. This schema, along with the pywr-v1-schema crate, provides a way to convert models from v1.x to v2.x. However, this process is not perfect and will very likely require manual intervention to complete the migration. The migration of larger and/or more complex models will require an iterative process of conversion and testing.

The overall process will follow these steps:

- Convert the JSON from v1.x to v2.x using the provided conversion tool.

- Handle any errors or warnings from the conversion tool.

- Apply any other manual changes to the converted JSON.

- (Optional) Save the converted JSON as a new file.

- Load and run the new JSON file in Pywr v2.x.

- Compare model outputs to ensure it behaves as expected. If necessary, make further changes to the above process and repeat.
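
For the final comparison step, a small tolerance-based check is often enough to highlight where the two versions diverge. The sketch below is a generic standard-library example. `compare_series` is a hypothetical helper, the tolerances are arbitrary, and the flow values are made up for illustration; in practice the series would come from the outputs of your v1.x and v2.x runs.

```python
import math


def compare_series(
    reference: list[float],
    candidate: list[float],
    rel_tol: float = 1e-6,
    abs_tol: float = 1e-9,
) -> list[int]:
    """Return the time-step indices where the two series differ."""
    if len(reference) != len(candidate):
        raise ValueError("Series must cover the same number of time steps")
    return [
        index
        for index, (ref, cand) in enumerate(zip(reference, candidate))
        if not math.isclose(ref, cand, rel_tol=rel_tol, abs_tol=abs_tol)
    ]


# Illustrative values only; not output from a real model.
v1_flow = [10.0, 10.0, 9.5, 8.0]
v2_flow = [10.0, 10.0000001, 9.5, 8.2]

mismatches = compare_series(v1_flow, v2_flow)
print(mismatches)
```

Small floating point differences are tolerated, while the last time step is reported as a mismatch that would need investigating.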

Converting a model

The example below is a basic script that demonstrates how to convert a v1.x model to v2.x. This process converts the model at runtime, and does not replace the existing v1.x model with a v2.x definition.

Note: This example is meant to be a starting point for users to build their own conversion process; it is not a complete generic solution.

The function in the listing below is an example of the overall conversion process. The function takes a path to a JSON file containing a v1 Pywr model, and then converts it to v2.x.

- The function reads the JSON and applies the conversion function (convert_model_from_v1_json_string).

- The conversion function takes a JSON string and returns a tuple of the converted schema and a list of errors.

- The function then handles these errors using the handle_conversion_error function.

- After the errors are handled, other arbitrary changes are applied using the patch_model function.

- Finally, the converted JSON can be saved to a new file and run using Pywr v2.x.

import json
from pathlib import Path

from pywr import (
    convert_model_from_v1_json_string,
    ComponentConversionError,
    ConversionError,
    ModelSchema,
)


def convert(v1_path: Path):
    with open(v1_path) as fh:
        v1_model_str = fh.read()

    # 1. Convert the v1 model to a v2 schema
    schema, errors = convert_model_from_v1_json_string(v1_model_str)
    schema_data = json.loads(schema.to_json_string())

    # 2. Handle any conversion errors
    for error in errors:
        handle_conversion_error(error, schema_data)

    # 3. Apply any other manual changes to the converted JSON.
    patch_model(schema_data)
    schema_data_str = json.dumps(schema_data, indent=4)

    # 4. Save the converted JSON as a new file (uncomment to save)
    # with open(v1_path.parent / "v2-model.json", "w") as fh:
    #     fh.write(schema_data_str)

    print("Conversion complete; running model...")

    # 5. Load and run the new JSON file in Pywr v2.x.
    schema = ModelSchema.from_json_string(schema_data_str)
    model = schema.build(Path(__file__).parent, None)
    model.run("clp")
    print("Model run complete 🎉")

Handling conversion errors

The convert_model_from_v1_json_string function returns a list of errors that occurred during the conversion process.

These errors can be handled in a variety of ways, such as modifying the model definition, raising exceptions, or

ignoring them.

It is suggested to implement a function that can handle these errors in a way that is appropriate for your use case.

Begin by matching a few types of errors and then expand the matching as needed. By raising exceptions

for unhandled errors, you can ensure that all errors are eventually accounted for, and that new errors are not missed.

The example handles the ComponentConversionError by matching on the error subclass (either Parameter() or Node()),

and then handling each case separately.

These two classes will contain the name of the component and optionally the attribute that caused the error.

In addition, these types contain an inner error (ConversionError) that can be used to provide more detailed

information.

In the example, the UnrecognisedType() class is handled for Parameter() errors by applying the

handle_custom_parameters function.

This second function adds a Pywr v2.x compatible custom parameter to the model definition using the same name and type (class name) as the original parameter.

def handle_conversion_error(error: ComponentConversionError, schema_data):
    """Handle a schema conversion error.

    Raises a `RuntimeError` if an unhandled error case is found.
    """
    match error:
        case ComponentConversionError.Parameter():
            match error.error:
                case ConversionError.UnrecognisedType() as e:
                    print(
                        f"Patching custom parameter of type {e.ty} with name {error.name}"
                    )
                    handle_custom_parameters(schema_data, error.name, e.ty)
                case _:
                    raise RuntimeError(f"Other parameter conversion error: {error}")
        case ComponentConversionError.Node():
            raise RuntimeError(f"Failed to convert node `{error.name}`: {error.error}")
        case _:
            raise RuntimeError(f"Unexpected conversion error: {error}")


def handle_custom_parameters(schema_data, name: str, p_type: str):
    """Patch the v2 schema to add the custom parameter with `name` and `p_type`."""

    # Ensure the network parameters is a list
    if "parameters" not in schema_data["network"]:
        schema_data["network"]["parameters"] = []

    schema_data["network"]["parameters"].append(
        {
            "meta": {"name": name},
            "type": "Python",
            "source": {"type": "Path", "path": "v2_custom_parameter.py"},
            "object": {
                "type": "Class",
                "class": p_type,
            },  # Use the same class name in v1 & v2
            "args": [],
            "kwargs": {},
        }
    )
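If the conversion is re-run against JSON that has already been patched, a function that always appends will add the same parameter twice. A minimal sketch of a more defensive variant follows. The helper name add_custom_parameter_once and its source_path argument are hypothetical; the entry layout is copied from the example in this section. Treating a null "parameters" value as an empty list is an assumption about how the converted schema may be serialised.

```python
def add_custom_parameter_once(
    schema_data: dict,
    name: str,
    p_type: str,
    source_path: str = "v2_custom_parameter.py",  # same file name as the example
) -> bool:
    """Add a "Python" parameter entry unless one named `name` already exists.

    Hypothetical helper: returns True if an entry was added, False otherwise.
    """
    network = schema_data["network"]
    # Assumption: "parameters" may be missing or null in the converted JSON.
    parameters = network.get("parameters") or []
    network["parameters"] = parameters

    if any(p.get("meta", {}).get("name") == name for p in parameters):
        return False  # Already patched; leave the existing entry untouched.

    parameters.append(
        {
            "meta": {"name": name},
            "type": "Python",
            "source": {"type": "Path", "path": source_path},
            "object": {"type": "Class", "class": p_type},
            "args": [],
            "kwargs": {},
        }
    )
    return True


schema_data = {"network": {"parameters": None}}
print(add_custom_parameter_once(schema_data, "my-param", "MyParameter"))  # True
print(add_custom_parameter_once(schema_data, "my-param", "MyParameter"))  # False
print(len(schema_data["network"]["parameters"]))  # 1
```

Returning a boolean lets the caller log whether a patch was actually applied.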

Other changes

The upgrade to v2.x may require other changes to the model. For example, the conversion process does not currently handle recorders and other model outputs. These will need to be manually added to the model definition.

Such manual changes can be applied using, for example, a patch_model function. This function can make arbitrary changes to the model definition. The example below updates the metadata of the model to modify the description.

def patch_model(schema_data):
    """Patch the v2 schema to add any additional changes."""
    # Add any additional patches here
    schema_data["metadata"]["description"] = "Converted from v1 model"
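Because a patch function can make arbitrary changes, it can help to review exactly what it changed before saving the file. The sketch below uses only the Python standard library to produce a unified diff of the JSON before and after patching. The describe_patch helper is hypothetical, and the small schema_data dictionary is a stand-in for real converted output.

```python
import difflib
import json


def patch_model(schema_data):
    """Same patch as in the example in this section."""
    schema_data["metadata"]["description"] = "Converted from v1 model"


def describe_patch(schema_data: dict, patch) -> list[str]:
    """Apply `patch` in place and return a unified diff of the JSON text."""
    before = json.dumps(schema_data, indent=4, sort_keys=True).splitlines()
    patch(schema_data)
    after = json.dumps(schema_data, indent=4, sort_keys=True).splitlines()
    return list(
        difflib.unified_diff(before, after, fromfile="before", tofile="after", lineterm="")
    )


# Stand-in for converted output; a real schema contains much more.
schema_data = {"metadata": {"title": "Example", "description": ""}, "network": {}}
for line in describe_patch(schema_data, patch_model):
    print(line)
```

Sorting the keys keeps the diff stable, so only genuine changes appear.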

Full example

The complete example below demonstrates the conversion process for a v1.x model to v2.x:

import json
from pathlib import Path

from pywr import (
    convert_model_from_v1_json_string,
    ComponentConversionError,
    ConversionError,
    ModelSchema,
)


def convert(v1_path: Path):
    with open(v1_path) as fh:
        v1_model_str = fh.read()

    # 1. Convert the v1 model to a v2 schema
    schema, errors = convert_model_from_v1_json_string(v1_model_str)
    schema_data = json.loads(schema.to_json_string())

    # 2. Handle any conversion errors
    for error in errors:
        handle_conversion_error(error, schema_data)

    # 3. Apply any other manual changes to the converted JSON.
    patch_model(schema_data)
    schema_data_str = json.dumps(schema_data, indent=4)

    # 4. Save the converted JSON as a new file (uncomment to save)
    # with open(v1_path.parent / "v2-model.json", "w") as fh:
    #     fh.write(schema_data_str)

    print("Conversion complete; running model...")

    # 5. Load and run the new JSON file in Pywr v2.x.
    schema = ModelSchema.from_json_string(schema_data_str)
    model = schema.build(Path(__file__).parent, None)
    model.run("clp")
    print("Model run complete 🎉")


def handle_conversion_error(error: ComponentConversionError, schema_data):
    """Handle a schema conversion error.

    Raises a `RuntimeError` if an unhandled error case is found.
    """
    match error:
        case ComponentConversionError.Parameter():
            match error.error:
                case ConversionError.UnrecognisedType() as e:
                    print(
                        f"Patching custom parameter of type {e.ty} with name {error.name}"
                    )
                    handle_custom_parameters(schema_data, error.name, e.ty)
                case _:
                    raise RuntimeError(f"Other parameter conversion error: {error}")
        case ComponentConversionError.Node():
            raise RuntimeError(f"Failed to convert node `{error.name}`: {error.error}")
        case _:
            raise RuntimeError(f"Unexpected conversion error: {error}")


def handle_custom_parameters(schema_data, name: str, p_type: str):
    """Patch the v2 schema to add the custom parameter with `name` and `p_type`."""

    # Ensure the network parameters is a list
    if "parameters" not in schema_data["network"]:
        schema_data["network"]["parameters"] = []

    schema_data["network"]["parameters"].append(
        {
            "meta": {"name": name},
            "type": "Python",
            "source": {"type": "Path", "path": "v2_custom_parameter.py"},
            "object": {
                "type": "Class",
                "class": p_type,
            },  # Use the same class name in v1 & v2
            "args": [],
            "kwargs": {},
        }
    )


def patch_model(schema_data):
    """Patch the v2 schema to add any additional changes."""
    # Add any additional patches here
    schema_data["metadata"]["description"] = "Converted from v1 model"


if __name__ == "__main__":
    pth = Path(__file__).parent / "v1-model.json"
    convert(pth)
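A handler that raises on the first unhandled error means a model with many unhandled errors needs several runs to reveal them all. One possible refinement is to call the handler for every error, collect the RuntimeError messages, and raise once at the end. The sketch below does this with a hypothetical handle_all_errors helper. The strings and the toy handler are stand-ins so the sketch runs without Pywr; in practice the handler would be something like handle_conversion_error, and the errors would be the list returned by the conversion function.

```python
from typing import Callable, Iterable


def handle_all_errors(errors: Iterable, schema_data: dict, handler: Callable) -> None:
    """Run `handler` on every error and report all failures together."""
    failures = []
    for error in errors:
        try:
            handler(error, schema_data)
        except RuntimeError as exc:
            failures.append(str(exc))

    if failures:
        summary = "\n".join(f"- {msg}" for msg in failures)
        raise RuntimeError(f"{len(failures)} unhandled conversion error(s):\n{summary}")


def toy_handler(error, schema_data):
    """Stand-in handler: 'patches' known errors and raises for the rest."""
    if error.startswith("known"):
        schema_data.setdefault("patched", []).append(error)
    else:
        raise RuntimeError(f"Unexpected conversion error: {error}")


schema_data = {}
try:
    handle_all_errors(["known-a", "odd-b", "odd-c"], schema_data, toy_handler)
except RuntimeError as exc:
    print(exc)
```

Errors that the handler can patch are still applied, so the final message lists only the cases that still need attention.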

Converting custom parameters

The main changes to custom parameters in Pywr v2.x are as follows:

- Custom parameters are no longer required to be a subclass of Parameter. They instead can be simple Python functions, or classes that implement a calc method.

- Users are no longer required to handle scenarios within custom parameters. Instead an instance of the custom parameter is created for each scenario in the simulation. This simplifies writing parameters and removes the risk of accidentally contaminating state between scenarios.

- Custom parameters are now added to the model using the "Python" parameter type, i.e. the "type" field in the parameter definition should be set to "Python" (not the class name of the custom parameter). This parameter type requires that the user explicitly define which metrics the custom parameter requires.

For more information on custom parameters, see the Custom parameters section of the documentation.

Simple example

v1.x custom parameter:

from pywr.parameters import ConstantParameter


class MyParameter(ConstantParameter):
    def value(self, *args, **kwargs):
        return 42


MyParameter.register()

v2.x custom parameter:

class MyParameter:
    def calc(self, *args, **kwargs):
        return 42
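Because one instance is created per scenario, state kept on self belongs to that scenario alone. The sketch below is a toy illustration of that point. The two instances are created by hand to stand in for what Pywr does for each scenario, and the RunningCount class is hypothetical. The calc signature mirrors the simple example in this section and does not document the arguments Pywr actually passes.

```python
class RunningCount:
    """Toy v2-style custom parameter that counts how often it is evaluated."""

    def __init__(self):
        self.calls = 0  # Per-instance state, and therefore per-scenario state

    def calc(self, *args, **kwargs):
        self.calls += 1
        return float(self.calls)


# Stand-in for Pywr creating one instance per scenario.
scenario_a = RunningCount()
scenario_b = RunningCount()

scenario_a.calc()
scenario_a.calc()
scenario_b.calc()

print(scenario_a.calls, scenario_b.calls)  # 2 1
```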

Developers Guide

This section is intended for developers who want to contribute to Pywr. It covers the following topics:

- Parameter types and traits

- Adding a new parameter

Parameter traits and return types

The pywr-core crate defines a number of traits that are used to implement parameters. These traits define the behaviour of the parameter and how it interacts with the model. Each parameter must implement the Parameter trait and one of the three compute traits: GeneralParameter<T>, SimpleParameter<T>, or ConstParameter<T>.

The Parameter trait

The Parameter trait is the base trait for all parameters in Pywr. It defines the basic behaviour of the parameter and how it interacts with the model. The minimum implementation requires returning the metadata for the parameter. Additional methods can be implemented to provide additional functionality. Please refer to the documentation for the Parameter trait for more information.

The GeneralParameter<T> trait

The GeneralParameter<T> trait is used for parameters that depend on MetricF64 values from the model. Because MetricF64 values can refer to other parameters, general model state or other information, implementing this trait provides the most flexibility for a parameter. The compute method is used to calculate the value of the parameter at a given timestep and scenario. This method is resolved in order with other model components such as nodes.

The SimpleParameter<T> trait

The SimpleParameter<T> trait is used for parameters that depend on SimpleMetricF64 or ConstantMetricF64 values only, or no other values at all. The compute method is used to calculate the value of the parameter at a given timestep and scenario, and therefore a SimpleParameter<T> can vary with time. This method is resolved in order with other SimpleParameter<T> implementations, before GeneralParameter<T> implementations and other model components such as nodes.

The ConstParameter<T> trait

The ConstParameter<T> trait is used for parameters that depend on ConstantMetricF64 values only and do not vary with time. The compute method is used to calculate the value of the parameter at the start of the simulation and is not resolved at each timestep. This method is resolved in order with other ConstParameter<T> implementations.
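The ordering described for the three compute traits can be pictured with a toy loop. This Python sketch is not Pywr code: the function and the lists of parameter names are hypothetical stand-ins. It only illustrates that constant parameters are evaluated once before the run, while simple parameters are evaluated before general ones on every timestep.

```python
def run_toy_simulation(const_params, simple_params, general_params, timesteps):
    """Return the order in which the toy parameters are evaluated."""
    order = []

    # Constant parameters: evaluated once at the start of the simulation.
    for name in const_params:
        order.append(("start", name))

    for t in range(timesteps):
        # Simple parameters are resolved before general parameters.
        for name in simple_params:
            order.append((t, name))
        for name in general_params:
            order.append((t, name))

    return order


for step, name in run_toy_simulation(["const"], ["simple"], ["general"], timesteps=2):
    print(step, name)
```

In this toy, the constant parameter appears once in the output while the other two appear once per timestep.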

Implementing multiple traits

A parameter should implement the "lowest" trait in the hierarchy, that is, the least general trait that still covers all of its dependencies. For example, if a parameter depends on a SimpleParameter<T> and a ConstParameter<T> value, it should implement the SimpleParameter<T> trait. If a parameter depends on a GeneralParameter<T> and a ConstParameter<T> value, it should implement the GeneralParameter<T> trait.

For some parameters it can be beneficial to implement multiple traits. For example, a parameter could be generic over the metric type (e.g. MetricF64, SimpleMetricF64, or ConstantMetricF64) and implement each of the three compute traits. This would allow the parameter to be used in the most efficient way possible depending on the model configuration.
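The rule for choosing a trait amounts to picking the most general kind among a parameter's dependencies. The following Python sketch is only an illustration of that rule. The Kind enum and the required_trait function are hypothetical and do not exist in Pywr.

```python
from enum import IntEnum


class Kind(IntEnum):
    """Dependency kinds, ordered from least to most general."""

    CONST = 0
    SIMPLE = 1
    GENERAL = 2


TRAIT_NAMES = {
    Kind.CONST: "ConstParameter<T>",
    Kind.SIMPLE: "SimpleParameter<T>",
    Kind.GENERAL: "GeneralParameter<T>",
}


def required_trait(dependencies: list[Kind]) -> str:
    """Return the trait a parameter with these dependencies should implement."""
    if not dependencies:
        # Without dependencies the choice depends on whether the value varies with time.
        raise ValueError("at least one dependency kind is required")
    return TRAIT_NAMES[max(dependencies)]


print(required_trait([Kind.SIMPLE, Kind.CONST]))  # SimpleParameter<T>
print(required_trait([Kind.GENERAL, Kind.CONST]))  # GeneralParameter<T>
```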

Return types

While the compute traits are generic over the type T, which is the return type of the compute method, Pywr currently only supports f64, usize and MultiValue types. The MultiValue type is used to return multiple values from the compute method. This is useful for parameters that return multiple values at a given timestep and scenario. See the documentation for the MultiValue type for more information. Implementations of the compute traits are usually for one of these concrete types.

Adding a new parameter to Pywr

This guide explains how to add a new parameter to Pywr.

When to add a new parameter?

New parameters can be added to complement the existing parameters in Pywr. These parameters should be generic and reusable across a wide range of models. By adding them to Pywr itself other users are able to use them in their models without having to implement them themselves. They are also typically implemented in Rust, which means they are fast and efficient.

If the parameter is specific to a particular model or data set, it is better to implement it in the model itself using a custom parameter. Custom parameters can be added using, for example, the PythonParameter.

Adding a new parameter

To add a new parameter to Pywr you need to do two things:

- Add the implementation to the pywr-core crate, and

- Add the schema definition to the pywr-schema crate.

Adding the implementation to pywr-core

The implementation of the parameter should be added to the pywr-core crate. This is typically done by adding a new module to the parameters module in the src directory. It is a good idea to follow the existing structure of the parameters module by making a new module for the new parameter. Developers can follow the existing parameters as examples.

In this example, we will add a new parameter called MaxParameter that calculates the maximum of a metric's value and a threshold. Parameters can depend on other parameters or values from the model via the MetricF64 type. In this case the metric field stores a MetricF64 that will be compared with the threshold field to calculate the maximum value. The threshold is a constant value that is set when the parameter is created. Finally, the meta field stores the metadata for the parameter. The ParameterMeta struct is used to store the metadata for all parameters and can be reused.

use pywr_core::metric::MetricF64;
use pywr_core::network::Network;
use pywr_core::parameters::{
    GeneralParameter, Parameter, ParameterCalculationError, ParameterMeta, ParameterName, ParameterState,
};
use pywr_core::scenario::ScenarioIndex;
use pywr_core::state::State;
use pywr_core::timestep::Timestep;

pub struct MaxParameter {
    meta: ParameterMeta,
    metric: MetricF64,
    threshold: f64,
}

To allow the parameter to be used in the model it is helpful to add a new function that creates a new instance of the parameter. This will be used by the schema to create the parameter when it is loaded from a model file.

impl MaxParameter {
    pub fn new(name: ParameterName, metric: MetricF64, threshold: f64) -> Self {
        Self {
            meta: ParameterMeta::new(name),
            metric,
            threshold,
        }
    }
}

Finally, the minimum implementation of the Parameter trait and one of the three compute traits should be added for MaxParameter. These traits require the meta function to return the metadata for the parameter, and the compute function to calculate the value of the parameter at a given timestep and scenario. In this case the compute function calculates the maximum of the metric's value and the threshold. The value of the metric is obtained from the model using the get_value function. See the documentation about parameter traits and return types for more information.

impl Parameter for MaxParameter {
    fn meta(&self) -> &ParameterMeta {
        &self.meta
    }
}

impl GeneralParameter<f64> for MaxParameter {
    fn compute(
        &self,
        _timestep: &Timestep,
        _scenario_index: &ScenarioIndex,
        model: &Network,
        state: &State,
        _internal_state: &mut Option<Box<dyn ParameterState>>,
    ) -> Result<f64, ParameterCalculationError> {
        // Current value
        let x = self.metric.get_value(model, state)?;
        Ok(x.max(self.threshold))
    }

    fn as_parameter(&self) -> &dyn Parameter
    where
        Self: Sized,
    {
        self
    }
}

Adding the schema definition to pywr-schema

The schema definition for the new parameter should be added to the pywr-schema crate. Again, it is a good idea to follow the existing structure of the schema by making a new module for the new parameter. Developers can also follow the existing parameters as examples.

As with the pywr-core implementation, the meta field is used to store the metadata for the parameter and can use the ParameterMeta struct (NB this is from the pywr-schema crate). The rest of the struct looks very similar to the pywr-core implementation, but uses pywr-schema types for the fields. The struct should also derive serde::Deserialize, serde::Serialize, Debug, Clone, JsonSchema, and PywrVisitAll to be compatible with the rest of Pywr.

Note: The PywrVisitAll derive is not shown in the listing as it cannot currently be used outside the pywr-schema crate.

use pywr_schema::metric::Metric;
use pywr_schema::parameters::ParameterMeta;
use schemars::JsonSchema;

#[derive(serde::Deserialize, serde::Serialize, Debug, Clone, JsonSchema)]
pub struct MaxParameter {
    #[serde(flatten)]
    pub meta: ParameterMeta,
    pub parameter: Metric,
    pub threshold: Option<f64>,
}

Next, the parameter needs a method to add itself to a network. This is typically done by implementing an add_to_model method for the parameter. This method should be feature-gated with the core feature to ensure it is only available when the core feature is enabled. The method should take a mutable reference to the network and a reference to the LoadArgs struct. The method should load the metric from the model using the load method, and then create a new MaxParameter using the new method implemented above. Finally, the method should add the parameter to the network using the add_parameter method.

#[cfg(feature = "core")]
use pywr_core::parameters::ParameterIndex;
#[cfg(feature = "core")]
use pywr_schema::{LoadArgs, SchemaError};

#[cfg(feature = "core")]
impl MaxParameter {
    pub fn add_to_model(
        &self,
        network: &mut pywr_core::network::Network,
        args: &LoadArgs,
    ) -> Result<ParameterIndex<f64>, SchemaError> {
        let idx = self.parameter.load(network, args, Some(&self.meta.name))?;
        let threshold = self.threshold.unwrap_or(0.0);

        let p = pywr_core::parameters::MaxParameter::new(self.meta.name.as_str().into(), idx, threshold);
        Ok(network.add_parameter(Box::new(p))?)
    }
}

Finally, the schema definition should be added to the Parameter enum in the parameters module. This will require ensuring the new variant is added to all places where that enum is used. The Rust compiler can be helpful in ensuring all places are updated, because a match on the enum that does not handle the new variant (and has no wildcard arm) will fail to compile.
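When testing a new parameter it can help to have an independent statement of the expected values. The Python sketch below mirrors the arithmetic of the MaxParameter built in this guide: the larger of the metric value and the threshold, with a missing threshold treated as 0.0 as in add_to_model. The function name expected_max is hypothetical. The NaN handling follows the documented behaviour of Rust's f64::max (if one argument is NaN, the other is returned), which differs from Python's built-in max.

```python
import math
from typing import Optional


def expected_max(value: float, threshold: Optional[float] = None) -> float:
    """Expected result of the MaxParameter for a single metric value."""
    # A missing threshold defaults to 0.0, mirroring `unwrap_or(0.0)`.
    t = 0.0 if threshold is None else threshold
    # Mirror Rust's `f64::max`: a NaN argument is ignored in favour of the other.
    if math.isnan(value):
        return t
    if math.isnan(t):
        return value
    return max(value, t)


print(expected_max(-3.0))  # 0.0
print(expected_max(5.0, 2.0))  # 5.0
print(expected_max(1.0, 2.0))  # 2.0
```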

Contributing to Documentation

The documentation for Pywr V2 is located in the pywr-book subfolder of the pywr-next repository.

The documentation is written in Markdown, a format which enables easy formatting for the web. This website can help you get started: www.markdownguide.org

To contribute documentation for Pywr V2, we recommend following the steps below to ensure we can review and integrate any changes as easily as possible.

Steps to create documentation

- Fork the pywr-next repository

- Clone the fork

git clone https://github.com/MYUSER/pywr-next

- Create a branch

git checkout -b my-awesome-docs

- Open the book documentation in your favourite editor

vi pywr-next/pywr-book/introduction.md

Which should look something like this:

- Having modified the documentation, add and commit the changes using the commit format

git add introduction.md

git commit -m "docs: Add an example documentation"

- Create a pull request from your branch

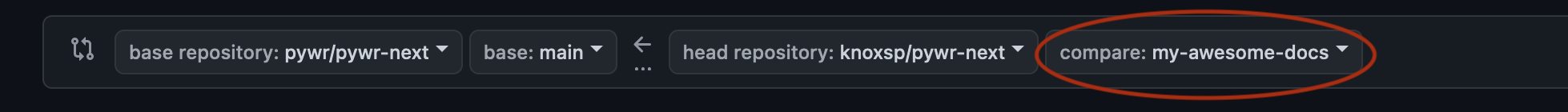

-

In your fork, click on the 'Pull Requests' tab

-

Click on 'New Pull Request'

-

Choose your branch from the drop-down on the right-hand-side

-

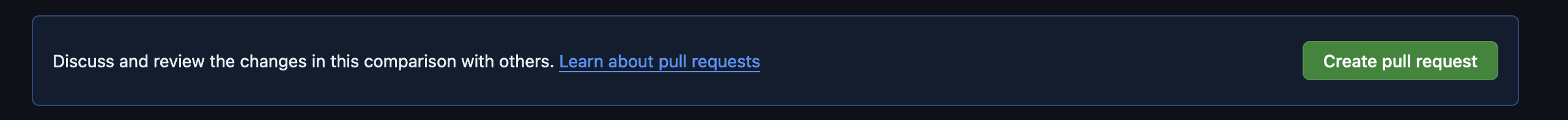

Click 'Create Pull Request' when the button appears

-

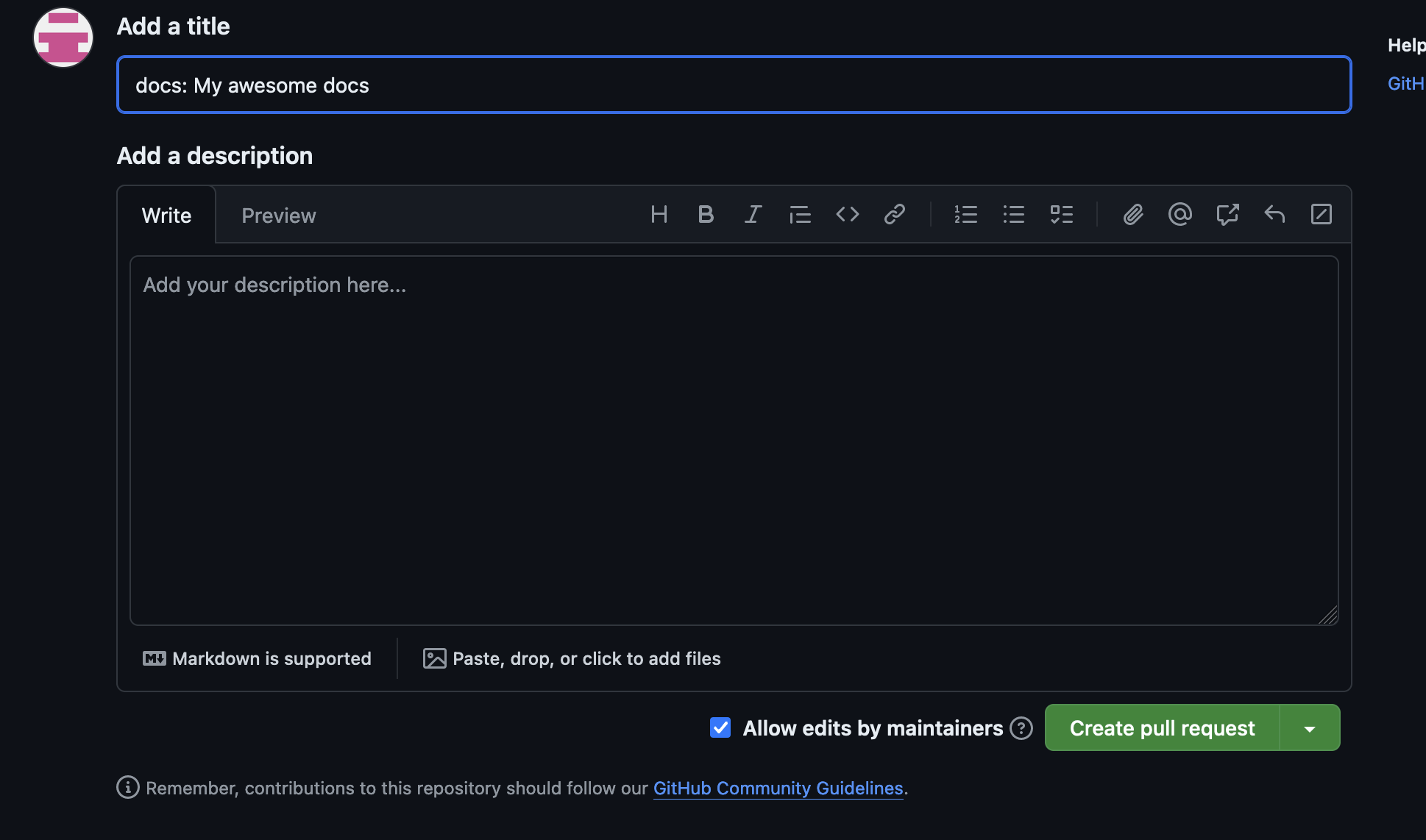

Add a note if you want, and click 'Create Pull Request'

-
